Large Language Models (LLMs)

Nothing is stopping you from integrating Prodigy with services that can help you annotate. This includes services that provide large language models, like OpenAI, that offer zero-shot learning. Prodigy version 1.12 provides a few integrated recipes to help you get started. These recipes should be seen as a preview of what’s possible with large language models, and while they currently interface with OpenAI, it’s very likely that we’ll offer more integrations in the future.

Quickstart

You can use the ner.openai.correct and ner.openai.fetch recipes to pre-highlight text examples with NER annotations. These annotations typically deserve a review, but OpenAI is able to generate annotations for many entities that are not supported out of the box by pretrained spaCy pipelines.

You can learn more by checking the named entity section on this page.

You can use the textcat.openai.correct and textcat.openai.fetch recipes to attach class predictions to text examples. These annotations typically deserve a review, but OpenAI is able to generate labels that don’t require you to train your own model beforehand.

You can learn more by checking the text classification section on this page.

The terms.openai.fetch recipe can take a simple prompt and generate a terminology list for you.

You can learn more by checking the terminology section on this page.

The ner.openai.fetch and textcat.openai.fetch recipes allow you to download predictions from OpenAI upfront. These predictions won’t be perfect, but they might allow you to select an interesting subset for manual review in Prodigy.

The most compelling use case for this is when you’re dealing with a rare label. Instead of going through all the examples manually, you could check only the examples in which OpenAI predicts the label of interest.
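If you’ve downloaded predictions with ner.openai.fetch, a small script can select that subset for you. The sketch below assumes that each line of the output file is a Prodigy-style task with a "spans" list whose entries carry a "label" key; that output shape is an assumption, so check it against your own file. The function name tasks_with_label is hypothetical.

```python
import json
from typing import Iterable, Iterator


def tasks_with_label(lines: Iterable[str], label: str) -> Iterator[dict]:
    """Yield only the tasks in which at least one predicted span has `label`.

    Assumes Prodigy-style NER tasks: {"text": ..., "spans": [{"label": ...}, ...]}.
    """
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        task = json.loads(line)
        if any(span.get("label") == label for span in task.get("spans", [])):
            yield task
```

You could pass it the lines of ner-annotated.jsonl, write the matching tasks to a new JSONL file and load only that smaller file into ner.manual.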

Be aware that it can be expensive to send many queries to OpenAI, but it can be worth the investment if this is a method to help you get started.
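To get a feeling for the cost before you start, a back-of-the-envelope estimate helps. The sketch below uses OpenAI’s rough rule of thumb of about four characters per token for English text, treats prompt and completion tokens at a single price, and takes that price as a parameter. The values you pass are placeholders, so look up the current pricing for your model. estimate_cost is a hypothetical helper, not part of Prodigy.

```python
from typing import Iterable

# OpenAI's rough rule of thumb for English: about 4 characters per token
CHARS_PER_TOKEN = 4.0


def estimate_cost(
    texts: Iterable[str],
    template_chars: int,
    completion_tokens: int,
    price_per_1k_tokens: float,
) -> float:
    """Roughly estimate the cost of sending one prompt per text.

    template_chars: length of the fixed part of every prompt (instructions,
        labels and any few-shot examples), which is paid for on each request.
    completion_tokens: assumed length of each response, in tokens.
    price_per_1k_tokens: placeholder price; prompt and completion tokens are
        treated as costing the same, which may not match real pricing.
    """
    total_tokens = 0.0
    for text in texts:
        prompt_tokens = (template_chars + len(text)) / CHARS_PER_TOKEN
        total_tokens += prompt_tokens + completion_tokens
    return total_tokens / 1000 * price_per_1k_tokens
```

Because the fixed part of the prompt is paid for on every request, adding few-shot examples multiplies quickly across a large dataset.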

OpenAI supports many different languages out of the box, but English seems to be the language it supports best. The Prodigy recipes assume English by default, but they do allow you to write your own custom prompts for other languages.

The custom prompts section on this page gives more details on this.

There can be good reasons to write custom prompts for OpenAI. To help with this, Prodigy provides the ab.openai.prompts recipe, which allows you to quickly compare the quality of outputs from two OpenAI prompts. You can learn more about this recipe in the A/B evaluation section on this page.

How do these recipes work?

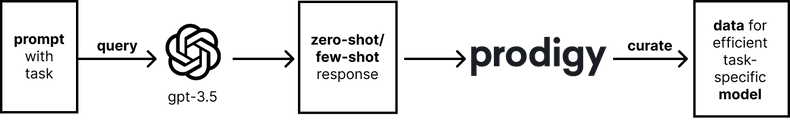

Large language models, like those offered by OpenAI, can be used for text completion tasks. They allow you to input some text as a prompt, and the model will generate a text completion that tries to match whatever context was given.

This text-in, text-out interface means you can try to engineer a prompt that the large language model can use to perform a specific task that you’re interested in. While this approach is not perfect and is part of ongoing research, it does offer a general method to construct prompts for many typical NLP tasks such as NER or text classification.

Prodigy, as of v1.12, offers six recipes that interact with OpenAI. All of these recipes handle generating the prompt for OpenAI as well as parsing its response in order to help you annotate.

ner.openai.correct: review NER annotations performed by OpenAI

ner.openai.fetch: download NER annotations by OpenAI in batch upfront

textcat.openai.correct: review text classification annotations performed by OpenAI

textcat.openai.fetch: download text classification annotations by OpenAI in batch upfront

terms.openai.fetch: retrieve terminology lists based on a query

ab.openai.prompts: compare prompts for OpenAI in a blind taste test

The integration with Prodigy is meant to aid the human in the loop by providing examples pre-annotated by OpenAI. The goal of the available recipes is to help you get started more quickly, but the recipes themselves, including their prompts, can be fully customized too.

Getting Started with OpenAI and Prodigy New: 1.12

If you want to get started with OpenAI and Prodigy you’ll want to set everything up so that you can work swiftly but also securely.

- Account Setup: You will need to set up an account for OpenAI, which you can do here. You can choose to use a free account while you’re testing the service, but you can also consider paying as you go. Their pricing page gives lots of details. Be aware that new accounts have some extra rate-limit restrictions, which are described in detail here.

- Keys and a .env file: Once your account is set up, it’s time to set up API keys, which you can do here. The Prodigy recipes will assume that your keys are stored in a local .env file. It needs to contain a PRODIGY_OPENAI_KEY, which you’ve just created, and a PRODIGY_OPENAI_ORG, which you can find here. This is what the .env file would look like:

  .env

  PRODIGY_OPENAI_ORG = "org-..."
  PRODIGY_OPENAI_KEY = "sk-..."

  You should make sure that this dotenv file is added to a .gitignore so that it never gets uploaded. If somebody were to gain access to this key, they might incur costs on your behalf with it.

- Keep an eye on costs: The OpenAI service will incur costs on every request that you make. In order to prevent a large unexpected bill, we recommend setting a spending cap on the API. This can make sure you never spend more than a predefined amount per month. You can configure this by going to the usage limits section of the account page on OpenAI.

- (Optional) Customise a recipe: The recipes provided by Prodigy are designed to be generally useful, but there can be good reasons to go beyond the zero-shot defaults. The NER and textcat recipes allow you to contribute examples to the prompt, which might improve the output of OpenAI. These recipes also allow you to write custom prompts for OpenAI, which can be relevant if you’re interested in generating responses in non-English languages.
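Before running a recipe, you may want to check that your .env file actually defines both keys. The sketch below is a deliberately minimal parser for simple KEY = "value" lines (no multi-line values or variable expansion). It is not how Prodigy itself reads the file, and the helper names are hypothetical.

```python
from typing import Dict, List

REQUIRED_KEYS = ("PRODIGY_OPENAI_KEY", "PRODIGY_OPENAI_ORG")


def parse_dotenv(text: str) -> Dict[str, str]:
    """Parse simple KEY = "value" lines, skipping blanks and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and surrounding quote characters
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(text: str) -> List[str]:
    """Return the required keys that are absent or empty."""
    values = parse_dotenv(text)
    return [key for key in REQUIRED_KEYS if not values.get(key)]
```

Calling missing_keys(pathlib.Path(".env").read_text()) should return an empty list once both keys are in place.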

Usage

This section explains how each OpenAI recipe works in detail by describing the prompt that is sent to OpenAI as well as the response that is returned.

OpenAI for NER New: 1.12

The ner.openai.correct and ner.openai.fetch recipes leverage OpenAI to pre-annotate named entities in text that you provide. As a motivating example, let’s assume that we have a set of example texts that contain comments on a food recipe blog. It might have an example that looks like this:

examples.jsonl

{"text": "Sriracha sauce goes really well with hoisin stir fry, but you should add it after you use the wok."}

Given an examples file, you can use ner.openai.correct to help with annotating.

Example

prodigy ner.openai.correct recipe-ner examples.jsonl --label "dish,ingredient,equipment"

Internally, this recipe will take the provided labels ("dish", "ingredient" and "equipment") together with the provided text in each example to generate a prompt for OpenAI. Here’s what such a prompt would look like:

NER prompt sent to OpenAI

From the text below, extract the following entities in the following format:

dish: <comma delimited list of strings>

ingredient: <comma delimited list of strings>

equipment: <comma delimited list of strings>

Text:

"""

Sriracha sauce goes really well with hoisin stir fry, but you should add it after you use the wok.

"""

This prompt is sent to OpenAI, which will return with a response. This response is not deterministic, but it might look something like this:

NER response from OpenAI

dish: hoisin stir fry

ingredient: Sriracha sauce

equipment: wok
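The recipe has to turn a response like this back into highlighted spans. The sketch below shows one way to do that, assuming the "label: a, b" line format requested in the prompt: it parses each line, then looks up every returned substring in the original text to get character offsets in Prodigy’s {"start", "end", "label"} span format. It is an illustration rather than Prodigy’s own parser; for example, it matches case-sensitively and does not resolve overlapping spans.

```python
import re
from typing import Dict, List


def parse_ner_response(response: str, labels: List[str]) -> Dict[str, List[str]]:
    """Turn lines like 'dish: hoisin stir fry' into {'dish': ['hoisin stir fry']}."""
    by_lower = {label.lower(): label for label in labels}
    found: Dict[str, List[str]] = {label: [] for label in labels}
    for line in response.splitlines():
        key, sep, value = line.partition(":")
        label = by_lower.get(key.strip().lower())
        if not sep or label is None:
            continue  # not a "label: ..." line for a label we asked for
        found[label].extend(part.strip() for part in value.split(",") if part.strip())
    return found


def to_spans(text: str, found: Dict[str, List[str]]) -> List[dict]:
    """Find each returned substring in the text and emit Prodigy-style spans."""
    spans = []
    for label, substrings in found.items():
        for substring in substrings:
            for match in re.finditer(re.escape(substring), text):
                spans.append({"start": match.start(), "end": match.end(), "label": label})
    return sorted(spans, key=lambda span: span["start"])


text = "Sriracha sauce goes really well with hoisin stir fry, but you should add it after you use the wok."
response = "dish: hoisin stir fry\ningredient: Sriracha sauce\nequipment: wok"
spans = to_spans(text, parse_ner_response(response, ["dish", "ingredient", "equipment"]))
print([(text[s["start"]:s["end"]], s["label"]) for s in spans])
# [('Sriracha sauce', 'ingredient'), ('hoisin stir fry', 'dish'), ('wok', 'equipment')]
```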

If you use the ner.openai.correct recipe, then you’ll be able to see this prompt and response from inside Prodigy.

Example of OpenAI interface

Because this example is annotated correctly, you can simply accept the annotation without having to use your mouse to annotate the entities. This can save a tremendous amount of time, but it should be stressed that the OpenAI annotations can be wrong. You still want to be in the loop to curate the annotations.

Alternatively, you can also use ner.openai.fetch to download these annotations to disk so that you can later review them with the ner.manual recipe. This interface won’t give you the prompt information, but it does allow you to call OpenAI only once, even if you want to show the data to multiple annotators.

Example

prodigy ner.openai.fetch examples.jsonl ner-annotated.jsonl "dish,ingredient,equipment"

100%|████████████████████████████| 50/50 [00:12<00:00, 3.88it/s]

OpenAI for Text Classification New: 1.12

The textcat.openai.correct and textcat.openai.fetch recipes leverage OpenAI to attach classification labels to text that you provide. As a motivating example, let’s assume that we have a set of example texts that contain comments on a food recipe blog. It might have an example that looks like this:

examples.jsonl

{"text": "Cream cheese is really good in mashed potatoes."}

Given an examples file, you can use textcat.openai.correct to help with annotating labels.

Example

prodigy textcat.openai.correct recipe-comments-textcat examples.jsonl --label "recipe,feedback,question"

Internally, this recipe will take the provided labels ("recipe", "feedback" and "question") together with the provided text in each example to generate a prompt for OpenAI. Here’s what such a prompt would look like:

Textcat prompt sent to OpenAI

Classify the text below to any of the following labels: recipe, feedback, question

The task is non-exclusive, so you can provide more than one label as long as they are comma-delimited.

For example: Label1, Label2, Label3.

Your answer should only be in the following format:

answer:

reason:

Here is the text that needs classification

Text:

"""

Cream cheese is really good in mashed potatoes.

"""

This prompt is sent to OpenAI, which will return with a response. This response is not deterministic, but it might look something like this:

Textcat response from OpenAI

Answer: Feedback

Reason: The text does not provide instructions on how to make something, nor does it ask a question. Instead, it provides an opinion on the use of cream cheese in mashed potatoes.
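Parsing a text classification response works along the same lines. Notice that the model answered "Feedback" with a capital letter while the label is "feedback", so matching should be case-insensitive. The sketch below assumes the "answer:"/"reason:" format requested in the multi-label prompt above (the binary prompt described later expects "accept" or "reject" instead) and returns the matched labels under an "accept" key, loosely mirroring how Prodigy stores selected options. The function itself is hypothetical.

```python
from typing import Dict, List


def parse_textcat_response(response: str, labels: List[str]) -> Dict[str, object]:
    """Read the 'answer:' and 'reason:' fields from a model response.

    Label matching is case-insensitive and ignores a trailing period, so
    'Answer: Feedback' maps onto the label 'feedback'.
    """
    by_lower = {label.lower(): label for label in labels}
    answer = ""
    reason_lines: List[str] = []
    in_reason = False
    for line in response.splitlines():
        key, sep, value = line.partition(":")
        key = key.strip().lower()
        if sep and key == "answer":
            answer, in_reason = value, False
        elif sep and key == "reason":
            reason_lines, in_reason = [value.strip()], True
        elif in_reason:
            reason_lines.append(line.strip())  # the reason may wrap onto more lines
    accepted: List[str] = []
    for part in answer.split(","):
        name = by_lower.get(part.strip().rstrip(".").lower())
        if name is not None and name not in accepted:
            accepted.append(name)
    return {"accept": accepted, "reason": " ".join(r for r in reason_lines if r)}
```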

If you use the textcat.openai.correct recipe, then you’ll be able to see this prompt and response from inside Prodigy.

The resulting interface in Prodigy.

Alternatively, you can also use textcat.openai.fetch to download these annotations to disk so that you can later review them with the textcat.manual recipe. This interface won’t give you the prompt information, but it does allow you to call OpenAI only once, even if you want to show the data to multiple annotators.

Example

prodigy textcat.openai.fetch examples.jsonl textcat-annotated.jsonl --label "recipe,feedback,question"

100%|████████████████████████████| 50/50 [00:12<00:00, 3.88it/s]

OpenAI for Terminology Lists New: 1.12

The terms.openai.fetch recipe can generate terms and phrases obtained from OpenAI. These terms can then be curated and turned into patterns files, which can help with downstream annotation tasks.

To get started, you need to make a query to send to OpenAI. The example below demonstrates how to generate at least 100 examples of “skateboard tricks”.

Example

prodigy terms.openai.fetch "skateboard tricks" skateboard-tricks.jsonl --n 100

Alternatively, you may also want to steer the response from OpenAI by providing some examples. You can add such seed terms via the --seeds option.

Example with seeds

prodigy terms.openai.fetch "skateboard tricks" skateboard-tricks.jsonl --n 100 --seeds "ollie,kickflip"

If you would like to generate more examples to add to the generated file, you can re-run the same command with the --resume flag. This will re-use the existing examples as seeds for the prompt to OpenAI.

Example that resumes an existing file

prodigy terms.openai.fetch "skateboard tricks" skateboard-tricks.jsonl --n 50 --resume

After generating the examples, you’ll have a skateboard-tricks.jsonl file with contents that might look like this:

{"text":"pop shove it","meta":{"openai_query":"skateboard tricks"}}

{"text":"switch flip","meta":{"openai_query":"skateboard tricks"}}

{"text":"nose slides","meta":{"openai_query":"skateboard tricks"}}

{"text":"lazerflip","meta":{"openai_query":"skateboard tricks"}}

{"text":"lipslide","meta":{"openai_query":"skateboard tricks"}}
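When you generate more terms with --resume, the new batch can overlap with what’s already in the file. The sketch below reads the existing terms (which the recipe re-uses as seeds) and keeps only new, case-insensitively distinct terms in the same record shape as above. Whether Prodigy deduplicates exactly like this is an assumption, and the helper names are hypothetical.

```python
import json
from typing import Dict, Iterable, List


def existing_terms(lines: Iterable[str]) -> List[str]:
    """Collect the "text" field of every record in a terms JSONL file."""
    return [json.loads(line)["text"] for line in lines if line.strip()]


def new_term_records(existing: List[str], generated: Iterable[str], query: str) -> List[Dict]:
    """Keep generated terms that aren't in the file yet (case-insensitive),
    wrapped in the same record shape as the file above."""
    seen = {term.lower() for term in existing}
    records = []
    for term in generated:
        term = term.strip()
        if term and term.lower() not in seen:
            seen.add(term.lower())
            records.append({"text": term, "meta": {"openai_query": query}})
    return records
```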

Given such a file, you’re able to review the generated terms via the textcat.manual recipe.

Example

prodigy textcat.manual skateboard-tricks skateboard-tricks.jsonl --label tricks

Annotating terms

From this interface you can manually accept or reject each example. Then, when you’re done annotating, you can export the annotated text into a patterns file via the terms.to-patterns recipe.

Example

prodigy terms.to-patterns skateboard-tricks ./skateboard-patterns.jsonl --label skateboard-trick --spacy-model blank:en

✨ Exported 129 patterns ./skateboard-patterns.jsonl

This will generate a file with patterns, like those shown below.

{"label":"skateboard-trick","pattern":[{"lower":"pop"},{"lower":"shove"},{"lower":"it"}]}

{"label":"skateboard-trick","pattern":[{"lower":"switch"},{"lower":"flip"}]}

{"label":"skateboard-trick","pattern":[{"lower":"nose"},{"lower":"slides"}]}

{"label":"skateboard-trick","pattern":[{"lower":"lazerflip"}]}

{"label":"skateboard-trick","pattern":[{"lower":"lipslide"}]}
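Each accepted term becomes a token pattern that matches case-insensitively via the "lower" attribute. The sketch below reproduces that shape by splitting on whitespace. This is an approximation: terms.to-patterns tokenizes with the spaCy pipeline you pass via --spacy-model, which can split punctuation and contractions differently. term_to_pattern is a hypothetical helper.

```python
import json
from typing import Dict


def term_to_pattern(term: str, label: str) -> Dict:
    """Build a case-insensitive token pattern by splitting the term on whitespace."""
    return {"label": label, "pattern": [{"lower": token.lower()} for token in term.split()]}


for term in ["pop shove it", "switch flip", "lazerflip"]:
    # compact separators give the same layout as the patterns file above
    print(json.dumps(term_to_pattern(term, "skateboard-trick"), separators=(",", ":")))
```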

From here, the skateboard-patterns.jsonl file can be used in recipes like ner.manual to make the annotation task easier.

A/B testing Custom Prompts New: 1.12

The ab.openai.prompts recipe allows you to quickly compare the quality of outputs from two OpenAI prompts in a quantifiable and blind way.

As an example, let’s assume that we want to write humorous haikus about a given topic. You could then create two Jinja templates that each accept a topic, yet construct a different prompt.

prompt1.jinja2

Write a haiku about {{topic}}.

prompt2.jinja2

Write a hilarious haiku about {{topic}}.

Next, you’ll want to have an input file in the appropriate format to feed these templates. The ab.openai.prompts recipe assumes data to be in the following format.

topics.jsonl

{"id": 0, "prompt_args": {"topic": "Python"}}

{"id": 0, "prompt_args": {"topic": "star wars"}}

{"id": 0, "prompt_args": {"topic": "maths"}}

Finally, it helps to also have a template that can add context to the annotation interface for the user. You can define another jinja template for that.

display-template.jinja2

Select the best haiku about {{topic}}.

When you put all of these templates together, you can start annotating. The command below starts the annotation interface and also uses the --repeat 4 option. This will ensure that each topic will be used to generate a prompt at least 4 times.

Example

prodigy ab.openai.prompts haiku topics.jsonl display-template.jinja2 prompt1.jinja2 prompt2.jinja2 --repeat 4

This will generate an annotation interface like the one below.

Example

When you’re done annotating, the terminal will also summarise the results for you.

Output after annotating

=============== ✨ Evaluation results ===============

✔ You preferred prompt1.jinja2

prompt1.jinja2   11
prompt2.jinja2   5
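What makes the comparison blind is that you don’t know which prompt produced which option. The sketch below illustrates that idea under stated assumptions: it expands each topic into --repeat duels, shuffles the order of the two prompts in every duel with a seeded random generator, and tallies winners the way the summary above reports them. It uses prompt names as stand-ins for the generated haikus and is not Prodigy’s implementation; build_duels is hypothetical.

```python
import random
from collections import Counter
from typing import Dict, List


def build_duels(topics: List[Dict], prompts: List[str], repeat: int, seed: int = 0) -> List[Dict]:
    """Create `repeat` duels per topic with the two options in random order."""
    rng = random.Random(seed)
    duels = []
    for topic in topics:
        for _ in range(repeat):
            order = list(prompts)
            rng.shuffle(order)  # hides which prompt is shown first
            duels.append({"prompt_args": topic["prompt_args"], "options": order})
    return duels


topics = [{"id": 0, "prompt_args": {"topic": t}} for t in ["Python", "star wars", "maths"]]
duels = build_duels(topics, ["prompt1.jinja2", "prompt2.jinja2"], repeat=4)
print(len(duels))  # 12: three topics, four duels each

# Each annotation records which hidden option won; counting gives the summary
choices = [duel["options"][0] for duel in duels]  # stand-in for real annotator picks
print(Counter(choices).most_common())
```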

Tournaments New: 1.12

Instead of comparing two prompts with each other, you can also create a tournament to compare any number of prompts via the ab.openai.tournament recipe. This recipe works just like the ab.openai.prompts recipe, but it allows you to pass a folder of prompts to create a tournament. The recipe will then internally use the Glicko ranking system to keep track of the best performing candidate and will select duels between prompts accordingly.
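For the curious, here is a sketch of a single Glicko-1 rating update, following Mark Glickman’s published formulas. Each prompt has a rating r and a rating deviation RD (its uncertainty); after a duel, the winner’s rating goes up, the loser’s goes down, and both RDs shrink. It also includes Glicko’s predicted win probability for a pairing. Prodigy’s internal implementation, and the way it computes the probabilities it reports, may differ. The starting values of 1500 and 350 are the conventional Glicko defaults, and the step that inflates RD between rating periods is omitted.

```python
import math
from typing import Tuple

Q = math.log(10) / 400  # Glicko's scaling constant


def g(rd: float) -> float:
    """Shrinks the impact of an opponent whose rating is still uncertain."""
    return 1 / math.sqrt(1 + 3 * Q**2 * rd**2 / math.pi**2)


def expected_score(r: float, r_opp: float, rd_opp: float) -> float:
    """Expected score of a player rated r against the opponent."""
    return 1 / (1 + 10 ** (-g(rd_opp) * (r - r_opp) / 400))


def update(r: float, rd: float, r_opp: float, rd_opp: float, score: float) -> Tuple[float, float]:
    """Glicko-1 update after one duel; score is 1 for a win and 0 for a loss."""
    e = expected_score(r, r_opp, rd_opp)
    d2 = 1 / (Q**2 * g(rd_opp) ** 2 * e * (1 - e))
    precision = 1 / rd**2 + 1 / d2
    new_r = r + (Q / precision) * g(rd_opp) * (score - e)
    new_rd = math.sqrt(1 / precision)
    return new_r, new_rd


def win_probability(r_a: float, rd_a: float, r_b: float, rd_b: float) -> float:
    """Glicko's predicted probability that A beats B, using both deviations."""
    return 1 / (1 + 10 ** (-g(math.sqrt(rd_a**2 + rd_b**2)) * (r_a - r_b) / 400))


# Both prompts start at the conventional defaults; prompt A wins one duel.
# Both updates use the pre-duel ratings, as Glicko does within a rating period.
r_a, rd_a = update(1500, 350, 1500, 350, score=1)
r_b, rd_b = update(1500, 350, 1500, 350, score=0)
print(round(r_a), round(r_b), round(rd_a), round(win_probability(r_a, rd_a, r_b, rd_b), 2))
```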

Just like in the ab.openai.prompts recipe, you’d need prompts in .jinja2 files, but now they can reside in a folder.

prompt_folder/prompt1.jinja2

Write a haiku about {{topic}}.

prompt_folder/prompt2.jinja2

Write a hilarious haiku about {{topic}}.

prompt_folder/prompt3.jinja2

Write a super funny haiku about {{topic}}.

You will also need to have a .jsonl file that contains the required prompt arguments.

topics.jsonl

{"id": 0, "prompt_args": {"topic": "Python"}}

{"id": 0, "prompt_args": {"topic": "star wars"}}

{"id": 0, "prompt_args": {"topic": "maths"}}

Finally, it also helps to have a template that can add context to the annotation interface for the user. You can define another jinja template for that.

display-template.jinja2

Select the best haiku about {{topic}}.

When you put all of these templates together, you can start a tournament that will match prompts for comparison. The tournament recipe will use each annotation to update its internal belief of prompt performance to decide which prompts to match in the next round.

Example

prodigy ab.openai.tournament haiku-tournament topics.jsonl display-template.jinja2 prompt_folder

This will generate the same annotation interface as the ab.openai.prompts recipe.

Example

As you annotate, you’ll also receive feedback on the prompt performance in the terminal. Early on in the annotation process, this feedback might look like this:

=================== Current winner: prompt3.jinja2 ===================

desc value

P(prompt3.jinja2 > prompt1.jinja2) 0.60

P(prompt3.jinja2 > prompt2.jinja2) 0.83

But as you add more annotations the recipe will update its belief of ratings over all of the available prompts. So after a while, the feedback might look more like this:

=================== Current winner: prompt3.jinja2 ===================

desc value

P(prompt3.jinja2 > prompt1.jinja2) 0.94

P(prompt3.jinja2 > prompt2.jinja2) 0.98

Adding Examples for Few Shot Prompts

OpenAI will make mistakes at times. However, you can attempt to steer the large language model in the right direction by providing some examples that it got wrong before. The named entity and text classification recipes provided by Prodigy can take such examples and make sure that they appear in the prompt in the right format.

The sections below explain how to do this and also how the mechanic works in more detail.

NER

As a motivating example, let’s assume that we have a set of example texts that contain comments on a food recipe blog. It might have an example that looks like this:

examples.jsonl

{"text": "Sriracha sauce goes really well with hoisin stir fry, but you should add it after you use the wok."}

Given such an examples file, you can use ner.openai.correct to help annotate named entities.

Example

prodigy ner.openai.correct recipe-ner examples.jsonl --label "dish,ingredient,equipment"

This will internally generate a prompt for OpenAI.

Prompt sent to OpenAI

From the text below, extract the following entities in the following format:

dish: <comma delimited list of strings>

ingredient: <comma delimited list of strings>

equipment: <comma delimited list of strings>

Text:

"""

Sriracha sauce goes really well with hoisin stir fry, but you should add it after you use the wok.

"""

This prompt might be sufficient, but if you find examples where OpenAI makes a lot of mistakes, it might be good to save these so that you can add them to the prompt. To do this, you can create a ner-examples.yaml file, which might have the following format:

ner-examples.yaml

- text: "You can't get a great chocolate flavor with carob."
  entities:
    dish: []
    ingredient: ['carob']
    equipment: []
- text: "You can probably sand-blast it if it's an anodized aluminum pan."
  entities:
    dish: []
    ingredient: []
    equipment: ['anodized aluminum pan']

Next, you can add these examples to the recipe via:

Example

prodigy ner.openai.correct recipe-ner examples.jsonl --label "dish,ingredient,equipment" --examples-path ./ner-examples.yaml

With these examples added, the prompt will update and contain the examples in the right format.

Prompt sent to OpenAI

From the text below, extract the following entities in the following format:

dish: <comma delimited list of strings>

ingredient: <comma delimited list of strings>

equipment: <comma delimited list of strings>

For example:

Text:

"""

You can't get a great chocolate flavor with carob.

"""

dish:

ingredient: carob

equipment:

Text:

"""

You can probably sand-blast it if it's an anodized aluminum pan.

"""

dish:

ingredient:

equipment: anodized aluminum pan

Here is the text that needs predictions.

Text:

"""

Sriracha sauce goes really well with hoisin stir fry, but you should add it after you use the wok.

"""
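To make the mechanics concrete, the sketch below assembles roughly the same prompt in plain Python from a list of examples shaped like the YAML file above (a text plus an entities mapping). It mirrors the structure of the prompt shown here, but the exact whitespace produced by Prodigy’s template may differ, and build_ner_prompt is a hypothetical helper.

```python
from typing import Dict, List, Optional


def build_ner_prompt(text: str, labels: List[str], examples: Optional[List[Dict]] = None) -> str:
    """Assemble an NER prompt, optionally with few-shot examples."""
    lines = ["From the text below, extract the following entities in the following format:"]
    lines += [f"{label}: <comma delimited list of strings>" for label in labels]
    if examples:
        lines += ["", "For example:", ""]
        for example in examples:
            lines += ["Text:", '"""', example["text"], '"""', ""]
            for label, substrings in example["entities"].items():
                # An empty list renders as "label:" with nothing after it
                lines.append(f"{label}: {', '.join(substrings)}".rstrip())
            lines.append("")
        lines.append("Here is the text that needs predictions.")
    lines += ["", "Text:", '"""', text, '"""']
    return "\n".join(lines)


examples = [
    {"text": "You can't get a great chocolate flavor with carob.",
     "entities": {"dish": [], "ingredient": ["carob"], "equipment": []}},
    {"text": "You can probably sand-blast it if it's an anodized aluminum pan.",
     "entities": {"dish": [], "ingredient": [], "equipment": ["anodized aluminum pan"]}},
]
print(build_ner_prompt(
    "Sriracha sauce goes really well with hoisin stir fry, but you should add it after you use the wok.",
    ["dish", "ingredient", "equipment"],
    examples,
))
```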

Maximum number of examples

Because every example added to the prompt sent to OpenAI increases the cost of each request, the recipes also provide a --max-examples argument that limits the number of examples added to each prompt. In that case, the recipe randomly selects which examples to include on each request.
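The random selection can be sketched as follows: when there are more examples than --max-examples allows, a fresh random subset is drawn for each request, so over many requests every example gets a chance to appear. The helper name and the use of random.sample are assumptions about how this could work, not Prodigy’s code.

```python
import random
from typing import Dict, List, Optional, Sequence


def pick_examples(examples: Sequence[Dict], max_examples: Optional[int], rng: random.Random) -> List[Dict]:
    """Return all examples, or a random subset of at most `max_examples`."""
    if max_examples is None or len(examples) <= max_examples:
        return list(examples)
    return rng.sample(list(examples), k=max_examples)


rng = random.Random(0)  # seeded only to make this demo repeatable
pool = [{"text": f"example {i}"} for i in range(10)]
for _ in range(3):
    # a different subset may be drawn for every request
    print([ex["text"] for ex in pick_examples(pool, 2, rng)])
```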

Text Classification

As a motivating example, let’s assume that we have a set of example texts that contain comments on a food recipe blog. It might have an example that looks like this:

comments.jsonl

{"text": "Cream cheese is really good in mashed potatoes."}

Given such an examples file, you can use textcat.openai.correct to help annotate classification labels.

Example

prodigy textcat.openai.correct recipe-comments-textcat comments.jsonl --label "recipe,feedback,question"

This command will internally generate the following prompt for OpenAI.

Generated OpenAI prompt

Classify the text below to any of the following labels: recipe, feedback, question

The task is non-exclusive, so you can provide more than one label as long as they are comma-delimited.

For example: Label1, Label2, Label3.

Your answer should only be in the following format:

answer:

reason:

Here is the text that needs classification

Text:

"""

Cream cheese is really good in mashed potatoes.

"""

You can add examples to this prompt but you need to be mindful of the type of classification task. Because the current task is a multilabel classification task we need to provide examples in the following format.

textcat-multilabel.yaml

- text: 'Can someone try this recipe?'
  answer: 'question'
  reason: 'It is a question about trying a recipe.'
- text:
    '1 cup of rice then egg and then mix them well. Should I add garlic last?'
  answer: 'question,recipe'
  reason: 'It is a question about the steps in making a fried rice.'

You can pass this file along via the --examples-path flag.

Example

prodigy textcat.openai.correct recipe-comments-textcat comments.jsonl --label "recipe,feedback,question" --examples-path ./textcat-multilabel.yaml

With these arguments, we will send a larger prompt to OpenAI.

Generated OpenAI prompt with multi-label examples

Classify the text below to any of the following labels: recipe, feedback, question

The task is non-exclusive, so you can provide more than one label as long as they are comma-delimited.

For example: Label1, Label2, Label3.

Your answer should only be in the following format:

answer:

reason:

Below are some examples (only use these as a guide):

Text:

"""

Can someone try this recipe?

"""

answer: question

reason: It is a question about trying a recipe.

Text:

"""

1 cup of rice then egg and then mix them well. Should I add garlic last?

"""

answer: question,recipe

reason: It is a question about the steps in making a fried rice.

Here is the text that needs classification

Text:

"""

Cream cheese is really good in mashed potatoes.

"""

Binary Labels

The previous example mentioned a multi-label example. However, it is also possible that you’re working on a strictly binary classification task. In that situation the examples need to be slightly different because OpenAI needs to strictly “accept” or “reject” an example.

To help explain this, let’s have a look at a default call for a binary task.

Example

prodigy textcat.openai.correct recipe-comments-textcat comments.jsonl --label "recipe"

This will generate the following prompt.

Generated OpenAI prompt

From the text below, determine whether or not it contains a recipe. If it is

a recipe, answer "accept." If it is not a recipe, answer "reject."

Your answer should only be in the following format:

answer:

reason:

Text:

"""

Cream cheese is really good in mashed potatoes.

"""

Like before, you’ll want to create a file that contains the examples that you want to add to the prompt.

textcat-binary.yaml

- text: 'This is a recipe for scrambled egg: 2 eggs, 1 butter, batter them, and then fry in a hot pan for 2 minutes'
  answer: 'accept'
  reason: 'This is a recipe for making a scrambled egg'
- text: 'This is a recipe for fried rice: 1 cup of day old rice, 1 butter, 2 cloves of garlic: put them all in a wok and stir them together.'
  answer: 'accept'
  reason: 'This is a recipe for making a fried rice'
- text: "I tried it and it's not good"
  answer: 'reject'
  reason: "It doesn't talk about a recipe."
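Because the expected answer format depends on whether you pass one label or several, it can be worth checking an examples file before you spend money on requests. The sketch below assumes the YAML has already been loaded into a list of dicts (for instance with a YAML library) and applies the two rules described above: a single label means answers must be "accept" or "reject", while several labels mean comma-separated label names. validate_textcat_examples is hypothetical.

```python
from typing import Dict, Iterable, List


def validate_textcat_examples(examples: Iterable[Dict], labels: List[str]) -> List[str]:
    """Return a list of problems; an empty list means the examples look usable."""
    binary = len(labels) == 1
    allowed = {"accept", "reject"} if binary else {label.lower() for label in labels}
    problems = []
    for i, example in enumerate(examples):
        missing = [key for key in ("text", "answer", "reason") if not example.get(key)]
        if missing:
            problems.append(f"example {i}: missing {', '.join(missing)}")
            continue
        answers = [a.strip().lower() for a in str(example["answer"]).split(",")]
        if binary and len(answers) != 1:
            problems.append(f"example {i}: a binary answer must be a single value")
        for answer in answers:
            if answer not in allowed:
                problems.append(f"example {i}: unexpected answer {answer!r}")
    return problems
```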

When we now pass this file along via the command below:

Example

prodigy textcat.openai.correct recipe-comments-textcat comments.jsonl --label "recipe" --examples-path ./textcat-binary.yaml

The prompt will update and look like this:

Generated OpenAI prompt with binary examples

From the text below, determine whether or not it contains a recipe. If it is

a recipe, answer "accept." If it is not a recipe, answer "reject."

Your answer should only be in the following format:

answer:

reason:

Below are some examples (only use these as a guide):

Text:

"""

This is a recipe for scrambled egg: 2 eggs, 1 butter, batter them, and then fry in a hot pan for 2 minutes

"""

answer: accept

reason: This is a recipe for making a scrambled egg

Text:

"""

This is a recipe for fried rice: 1 cup of day old rice, 1 butter, 2 cloves of garlic: put them all in a wok and stir them together.

"""

answer: accept

reason: This is a recipe for making a fried rice

Text:

"""

I tried it and it's not good

"""

answer: reject

reason: It doesn't talk about a recipe.

Here is the text that needs classification

Text:

"""

Cream cheese is really good in mashed potatoes.

"""

Maximum number of examples

Because every example added to the prompt sent to OpenAI increases the cost of each request, the recipes also provide a --max-examples argument that limits the number of examples added to each prompt. In that case, the recipe randomly selects which examples to include on each request.

Making Custom Prompts

The recipes that Prodigy provides have been designed to work for an English use case. However, you might be interested in using these recipes for another language. If that’s the case you could try to design your own prompts to suit your specific use-case.

Prompts for NER

In order to customise the NER prompt, it helps to understand the Jinja template that Prodigy uses internally.

ner.jinja2

From the text below, extract the following entities in the following format:

{# whitespace #}

{%- for label in labels -%}

{{label}}:

{# whitespace #}

{%- endfor -%}

{# whitespace #}

{# whitespace #}

{%- if examples -%}

{# whitespace #}

For example:

{# whitespace #}

{# whitespace #}

{%- for example in examples -%}

Text:

"""

{{ example.text }}

"""

{# whitespace #}

{%- for label, substrings in example.entities.items() -%}

{{ label }}: {{ ", ".join(substrings) }}

{# whitespace #}

{%- endfor -%}

{# whitespace #}

{% endfor -%}

{%- endif -%}

{# whitespace #}

This is the example that needs prediction.

{# whitespace #}

{# whitespace #}

Text:

"""

{{text}}

"""

This template is able to take an input text, labels and examples to turn it into a prompt for OpenAI. You can run the following Python code yourself to get a feeling for it.

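import pathlib

import jinja2

# Read the template from disk, then compile it
template_text = pathlib.Path("ner.jinja2").read_text()
template = jinja2.Template(template_text)

# Render a prompt for one text; no few-shot examples are passed here
prompt = template.render(
    text="Steve Jobs founded Apple in 1976.",
    labels=["name", "organisation"],
)
print(prompt)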

This example would generate the following prompt.

OpenAI Prompt

From the text below, extract the following entities in the following format:

name:

organisation:

This is the example that needs prediction.

Text:

"""

Steve Jobs founded Apple in 1976.

"""

You’re free to take the original prompt and to make changes to it. You can add details relevant to your domain or you can change the language of the prompt. Just make sure that you don’t change the names of the rendered variables and that you don’t change the structure of the prompt. The result from OpenAI will still need to get parsed and if OpenAI sends a response in an unexpected format the recipe won’t be able to render it.

In the case of NER, the template assumes that labels is a list of strings referring to label names and that text refers to the text of the current annotation example. The examples variable holds the examples that are passed along to steer the prompt, and example refers to each one of them inside the loop. The NER examples section gives more details on the expected format for these.

Once you have a custom template ready, you can refer to it from the command line.

Example with custom prompt

prodigy ner.openai.correct recipe-ner examples.jsonl --label "dish,ingredient,equipment" --prompt-path ./custom-ner.jinja2

Prompts for Textcat

Customising the prompt for text classification works in a similar fashion as the named entity variant but with a different template.

textcat.jinja2

{% if labels|length == 1 %}

{% set label = labels[0] %}

From the text below, determine whether or not it contains a {{ label }}. If it is

a {{ label }}, answer "accept." If it is not a {{ label }}, answer "reject."

{% else %}

Classify the text below to any of the following labels: {{ labels|join(", ") }}

{% if exclusive_classes %}

The task is exclusive, so only choose one label from what I provided.

{% else %}

The task is non-exclusive, so you can provide more than one label as long as

they're comma-delimited. For example: Label1, Label2, Label3.

{% endif %}

{% endif %}

{# whitespace #}

Your answer should only be in the following format:

{# whitespace #}

answer:

reason:

{# whitespace #}

{% if examples %}

Below are some examples (only use these as a guide):

{# whitespace #}

{# whitespace #}

{% for example in examples %}

Text:

"""

{{ example.text }}

"""

{# whitespace #}

answer: {{ example.answer }}

reason: {{ example.reason }}

{% endfor %}

{% endif %}

{# whitespace #}

Here is the text that needs classification

{# whitespace #}

Text:

"""

{{text}}

"""

This template is more elaborate than the named entity one because this template makes a distinction between binary classification, multilabel classification and exclusive classification. Like before, you’re free to make any changes you like as long as you don’t change the names of the rendered variables and that you don’t change the structure of the prompt. The result from OpenAI will still need to get parsed and if OpenAI sends a response in an unexpected format the recipe won’t be able to render it.

Once you’ve created a custom template you can refer to it from the command line.

Example with custom prompt

prodigy textcat.openai.correct recipe-comments-textcat examples.jsonl --label "recipe,feedback,question" --prompt-path ./custom-textcat.jinja2

Prompts for Terms

If you’re interested in generating terms in another language, then you may not need to write a separate template. Instead, you can use a query that describes the language that you are interested in, like below.

Example

prodigy terms.openai.fetch "Dutch insults" dutch-insults.jsonl --n 100

Alternatively, if you prefer more control, you can also choose to write a custom template by making changes to the following jinja template:

terms.jinja2

Generate me {{n}} examples of {{query}}.

Here are the examples:

{%- if examples -%}

{%- for example in examples -%}

{# whitespace #}

- {{example}}

{%- endfor -%}

{%- endif -%}

{# whitespace #}

-

The query variable is defined by the “query” argument from the command line, and the n variable is defined via --n. The examples in this template refer to the --seeds that are passed along.
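The mapping from command-line arguments to template variables can be sketched in plain Python. The function below approximates what terms.jinja2 renders: the comma-separated --seeds string becomes the examples list, and the trailing "-" invites the model to continue the list. The exact whitespace of Prodigy’s template may differ, and build_terms_prompt is hypothetical.

```python
from typing import Optional


def build_terms_prompt(query: str, n: int, seeds: Optional[str] = None) -> str:
    """Approximate the terms.jinja2 prompt from the command-line arguments."""
    # --seeds "ollie,kickflip" becomes the template's `examples` list
    examples = [s.strip() for s in seeds.split(",") if s.strip()] if seeds else []
    lines = [f"Generate me {n} examples of {query}.", "Here are the examples:"]
    lines += [f"- {example}" for example in examples]
    lines.append("-")  # an open bullet for the model to continue
    return "\n".join(lines)


print(build_terms_prompt("skateboard tricks", 100, "ollie,kickflip"))
```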

Once you’ve created a custom template you can refer to it from the command line.

Example

prodigy terms.openai.fetch "Dutch insults" dutch-insults.jsonl --n 100 --prompt-path ./custom-terms.jinja2