Task Routing

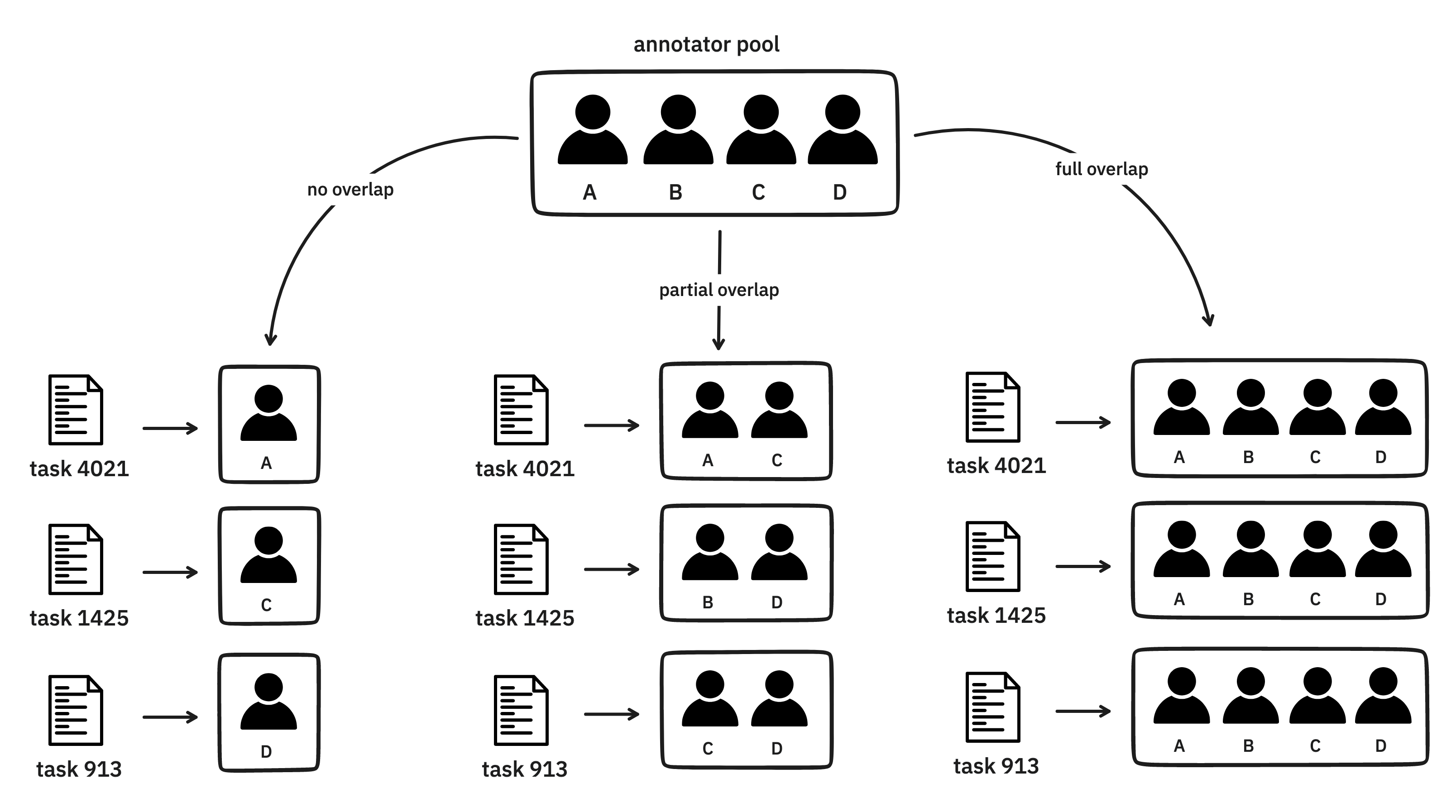

Prodigy allows you to distribute the annotation workload among multiple annotators or workers. You can make sure examples are seen by multiple annotators or even set up custom routing so that specific examples get seen by specific annotators.

Configure feed overlap

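Prodigy's configuration file (prodigy.json) lets you set "feed_overlap" or "annotations_per_task" to quickly define how tasks are routed between your pool of annotators. With "feed_overlap" set to true, every annotator sees every example; set to false, each example is sent out only once. "annotations_per_task" lets you choose how many annotators should see each example.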

These settings can be changed over time as your project evolves. High overlap can make sense early in a project because it lets you compare annotators and discuss their disagreements. Eventually, though, you may prefer less overlap so that your annotators cover more unique examples.
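To make the difference between these settings concrete, here is a minimal pure-Python sketch of deterministic overlap. It is an illustration only and not Prodigy's implementation. The function `route_with_overlap` and the annotator names are hypothetical. It picks `per_task` annotators for a task, starting from a position derived from the task hash, so the same task always goes to the same people.

```python
from typing import List


def route_with_overlap(task_hash: int, annotators: List[str], per_task: int) -> List[str]:
    """Hypothetical sketch: pick `per_task` annotators for a task, deterministically.

    per_task == len(annotators) mimics full overlap (everyone sees every task);
    per_task == 1 mimics no overlap (each task is annotated once).
    """
    if not annotators:
        return []
    # Never ask for more annotators than exist
    n = min(per_task, len(annotators))
    # Same hash -> same starting annotator (Python's % is non-negative here)
    start = task_hash % len(annotators)
    # Walk around the annotator list cyclically from the start position
    return [annotators[(start + i) % len(annotators)] for i in range(n)]


annotators = ["alice", "bob", "carol"]
print(route_with_overlap(7, annotators, 3))  # full overlap: ['bob', 'carol', 'alice']
print(route_with_overlap(7, annotators, 1))  # no overlap: ['bob']
print(route_with_overlap(7, annotators, 2))  # two per task: ['bob', 'carol']
```

Deriving the start position from the hash spreads the workload across annotators while keeping each task's assignment stable between requests.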

Custom routing mechanisms

Tasks can also be routed with custom Python code, which lets you fully control who annotates each example. Prodigy also offers some utilities to ensure that annotators are assigned consistently.

Router based on model confidence

```python
from typing import Dict, List

from prodigy.core import Controller


def task_router_conf(ctrl: Controller, session_id: str, item: Dict) -> List[str]:
    """Route tasks based on the confidence of a model."""
    all_annotators = ctrl.all_session_ids
    # custom_model is a placeholder for your own scoring function
    confidence_score = custom_model(item["text"])
    if confidence_score < 0.3:
        # If the confidence is low, the example might be hard,
        # so everyone needs to check it
        return all_annotators
    # Otherwise just one person needs to check. We re-use the task hash
    # to ensure consistent routing of the task.
    idx = item["_task_hash"] % len(all_annotators)
    # The router returns a list of session IDs, not a single string
    return [all_annotators[idx]]
```
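Hard-coding the model and threshold makes a confidence-based router difficult to test. The sketch below wraps the same logic in a hypothetical factory, `make_confidence_router`. You pass in any scoring function and threshold, and it returns a router with the same `(ctrl, session_id, item)` signature that returns a list of session IDs. A `SimpleNamespace` stands in for Prodigy's `Controller`, and the lambda stands in for a real model. Both are assumptions made only so the logic can be checked without a running Prodigy server.

```python
from types import SimpleNamespace
from typing import Callable, Dict, List


def make_confidence_router(score_fn: Callable[[str], float], threshold: float = 0.3):
    """Hypothetical factory: build a confidence-based router around any scoring function."""

    def router(ctrl, session_id: str, item: Dict) -> List[str]:
        all_annotators = list(ctrl.all_session_ids)
        if not all_annotators:
            # No sessions yet: nobody to route to (also avoids modulo by zero)
            return []
        if score_fn(item["text"]) < threshold:
            # Hard example: route to every annotator
            return all_annotators
        # Easy example: one annotator, chosen consistently from the task hash
        return [all_annotators[item["_task_hash"] % len(all_annotators)]]

    return router


# Stand-ins for a real Controller and model, so the logic can be checked offline
fake_ctrl = SimpleNamespace(all_session_ids=["alice", "bob", "carol"])
router = make_confidence_router(lambda text: 0.9 if "great" in text else 0.1)

print(router(fake_ctrl, "alice", {"text": "great product", "_task_hash": 4}))  # ['bob']
print(router(fake_ctrl, "alice", {"text": "meh", "_task_hash": 4}))  # ['alice', 'bob', 'carol']
```

The closure keeps the model and threshold out of global state. You can therefore swap models or tune the cutoff without editing the routing logic itself.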

Example

```
prodigy review reviews_final reviews_2019,reviews2018
```

Review interface for binary text classification

Review annotators

Especially early in your machine learning project, it's important to review annotations from different annotators. You want to detect differences in how annotators interpret or understand the annotation guidelines as early as possible. Reviewing their disagreements also gives you valuable feedback that you can use to update your annotation instructions.

Prodigy provides a review recipe to make this easy. It presents one or more versions of a given task, for example annotated in different sessions by different users. It displays each version with its session information and lets the reviewer correct or override the decision. It also renders the differences between the annotators' versions.

The same recipe can also be configured to automatically accept all the examples where there is no disagreement. To learn more, check the documentation.
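The agree/disagree split behind automatic acceptance can be sketched in a few lines of Python. This is a conceptual illustration, not the review recipe's implementation; the function `split_by_agreement` is hypothetical. It groups annotations by `_task_hash` and compares their `answer` values, which is enough for binary text classification. Other interfaces would also need to compare labels or spans. A task seen by only one annotator counts as agreed here.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def split_by_agreement(annotations: List[Dict]) -> Tuple[List[Dict], Dict[int, List[Dict]]]:
    """Sketch: separate unanimous tasks from tasks that need a reviewer.

    Returns (agreed, disputed): agreed holds one example per unanimous task,
    disputed maps each task hash to all of its conflicting versions.
    """
    # Collect every annotator's version of the same task
    by_task: Dict[int, List[Dict]] = defaultdict(list)
    for eg in annotations:
        by_task[eg["_task_hash"]].append(eg)

    agreed: List[Dict] = []
    disputed: Dict[int, List[Dict]] = {}
    for task_hash, versions in by_task.items():
        answers = {eg["answer"] for eg in versions}
        if len(answers) == 1:
            agreed.append(versions[0])  # no disagreement: accept as-is
        else:
            disputed[task_hash] = versions  # disagreement: show to a reviewer
    return agreed, disputed


annotations = [
    {"_task_hash": 1, "_session_id": "reviews_2019-alice", "answer": "accept"},
    {"_task_hash": 1, "_session_id": "reviews_2019-bob", "answer": "accept"},
    {"_task_hash": 2, "_session_id": "reviews_2019-alice", "answer": "accept"},
    {"_task_hash": 2, "_session_id": "reviews_2019-bob", "answer": "reject"},
]
agreed, disputed = split_by_agreement(annotations)
print(len(agreed), list(disputed))  # 1 [2]
```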
